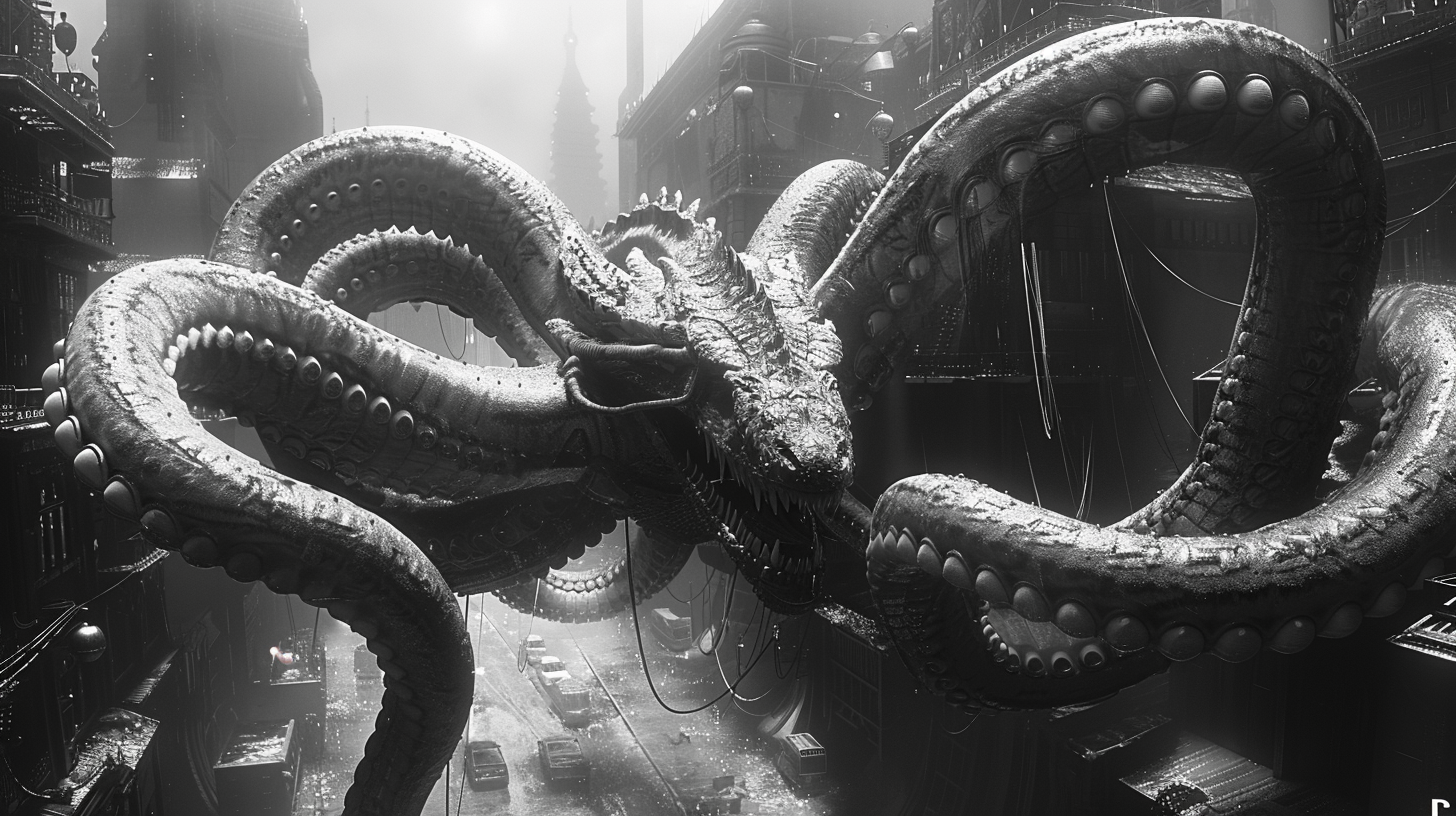

We should all be working hard to avoid the AI ouroboros, which would create a huge amount of data corruption and digital decay for humanity and for future AIs. Let us avoid an apocalyptic digital future by paying creative humans, offering up data for training in responsible ways, and making the advances built on humanity's collective knowledge available to everyone.

The Curse of Recursion: Training on Generated Data Makes Models Forget

Ilia Shumailov, Zakhar Shumaylov, Yiren Zhao, Yarin Gal, Nicolas Papernot, Ross Anderson

Stable Diffusion revolutionised image creation from descriptive text. GPT-2, GPT-3(.5) and GPT-4 demonstrated astonishing performance across a variety of language tasks. ChatGPT introduced such language models to the general public. It is now clear that large language models (LLMs) are here to stay, and will bring about drastic change in the whole ecosystem of online text and images. In this paper we consider what the future might hold. What will happen to GPT-{n} once LLMs contribute much of the language found online? We find that use of model-generated content in training causes irreversible defects in the resulting models, where tails of the original content distribution disappear. We refer to this effect as Model Collapse and show that it can occur in Variational Autoencoders, Gaussian Mixture Models and LLMs. We build theoretical intuition behind the phenomenon and portray its ubiquity amongst all learned generative models. We demonstrate that it has to be taken seriously if we are to sustain the benefits of training from large-scale data scraped from the web. Indeed, the value of data collected about genuine human interactions with systems will be increasingly valuable in the presence of content generated by LLMs in data crawled from the Internet.
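The claim that "tails of the original content distribution disappear" can be illustrated with a toy loop. This is a minimal sketch, not the authors' code. It assumes the "model" is a single one-dimensional Gaussian that each generation refits only on samples drawn from the previous generation's fit. The sample size, number of generations and seed are arbitrary, hypothetical choices. Each refit on a small, finite sample is noisy and biased toward a narrower spread. These errors compound, so the fitted standard deviation shrinks toward zero and values far from the mean stop being generated.

```python
import random
import statistics


def simulate_collapse(n_samples=10, generations=200, seed=0):
    """Toy model-collapse loop (illustrative assumption, not the paper's setup).

    Each generation fits a Gaussian to data sampled from the previous
    generation's fitted Gaussian, with no fresh "human" data mixed in.
    Returns the fitted standard deviation for every generation.
    """
    rng = random.Random(seed)
    # Generation 0 "human" data: draws from the true distribution N(0, 1).
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    stds = []
    for _ in range(generations + 1):
        # Fit the model: maximum-likelihood mean and population std.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        stds.append(sigma)
        # The next generation trains only on samples from the fitted model.
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
    return stds


if __name__ == "__main__":
    stds = simulate_collapse()
    for gen in (0, 10, 50, 100, 200):
        print(f"generation {gen:3d}: fitted std = {stds[gen]:.3e}")
```

With ten samples per generation, the fitted spread typically falls by many orders of magnitude within a couple of hundred generations. That is a crude analogue of the recursive training the abstract warns about.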

The AI doesn't know if an AI is feeding it.

https://www.theverge.com/2023/7/25/23807487/openai-ai-generated-low-accuracy

OpenAI can’t tell if something was written by AI.

By Emilia David, a reporter who covers AI. Prior to joining The Verge, she covered the intersection between technology, finance, and the economy.

OpenAI shuttered a tool that was supposed to tell human writing from AI due to a low accuracy rate. ... OpenAI fully admitted the classifier was never very good at catching AI-generated text and warned that it could spit out false positives, aka human-written text tagged as AI-generated.

LLMs need lots of good, human-generated data to become "AI"

Companies racing to develop more powerful artificial intelligence are rapidly nearing a new problem: The internet might be too small for their plans. From a report:

_Ever more powerful systems developed by OpenAI, Google and others require larger oceans of information to learn from. That demand is straining the available pool of quality public data online at the same time that some data owners are blocking access to AI companies. Some executives and researchers say the industry's need for high-quality text data could outstrip supply within two years, potentially slowing AI's development._

_AI companies are hunting for untapped information sources, and rethinking how they train these systems. OpenAI, the maker of ChatGPT, has discussed training its next model, GPT-5, on transcriptions of public YouTube videos, people familiar with the matter said. Companies also are experimenting with using AI-generated, or synthetic, data as training material -- an approach many researchers say could actually cause crippling malfunctions. These efforts are often secret, because executives think solutions could be a competitive advantage._

_Data is among several essential AI resources in short supply. The chips needed to run what are called large-language models behind ChatGPT, Google's Gemini and other AI bots also are scarce. And industry leaders worry about a dearth of data centers and the electricity needed to power them. AI language models are built using text vacuumed up from the internet, including scientific research, news articles and Wikipedia entries. That material is broken into tokens -- words and parts of words that the models use to learn how to formulate humanlike expressions._

Eat Google's lunch by giving away other people's lunches with coupons inserted inside.

Generative AI search engine Perplexity, which claims to be a Google competitor and recently snagged a $73.6 million Series B funding from investors like Jeff Bezos, is going to start selling ads, the company told ADWEEK. Perplexity uses AI to answer users' questions, based on web sources. It incorporates videos and images in the response and even data from partners like Yelp. Perplexity also links sources in the response while suggesting related questions users might want to ask. These related questions, which account for 40% of Perplexity's queries, are where the company will start introducing native ads, by letting brands influence these questions, said company chief business officer Dmitry Shevelenko. When a user delves deeper into a topic, the AI search engine might offer organic and brand-sponsored questions. Perplexity will launch this in the upcoming quarters, but Shevelenko declined to disclose more specifics. While Perplexity touts on its site that search should be "free from the influence of advertising-driven models," advertising was always in the cards for the company. "Advertising was always part of how we're going to build a great business," said Shevelenko.